A team led by researchers at Northwestern University in Chicago has developed the first AI to date that can intelligently design robots from scratch. The robot it designed is unassuming – small, squishy, and misshapen. And, for now, it is made of inorganic materials. But the developers say it represents the first step in a new era of AI-designed tools.

To test it, the researchers gave the system a simple prompt: Design a robot that can walk across a flat surface. While it took nature billions of years to evolve the first walking species, the new algorithm compressed evolution to lightning speed – designing a successfully walking robot in mere seconds.

The study was published in the latest issue of the journal Proceedings of the National Academy of Sciences under the title “Efficient automatic design of robots.”

“We discovered a very fast, AI-driven design algorithm that bypasses the traffic jams of evolution without falling back on the bias of human designers,” said Sam Kriegman, a professor of computer science, mechanical engineering, and chemical and biological engineering at Northwestern’s McCormick School of Engineering. Kriegman led the work with David Matthews, a scientist in his lab. They worked closely with co-authors Andrew Spielberg and Daniela Rus from the Massachusetts Institute of Technology and Josh Bongard from the University of Vermont for several years before their breakthrough discovery.

'We can watch evolution in action'

“Now anyone can watch evolution in action as AI generates better and better robot bodies in real time,” Kriegman said. “We told the AI that we wanted a robot that could walk across land. Then we simply pressed a button and presto! It generated a blueprint for a robot in the blink of an eye that looks nothing like any animal that has ever walked the earth. I call this process ‘instant evolution.’ ”

About three years ago, Kriegman garnered widespread media attention for developing xenobots – the first living robots made entirely from biological cells. Xenobots, named after the African clawed frog (Xenopus laevis), are synthetic lifeforms that are designed by computers to perform some desired function and built by combining different biological tissues. Whether xenobots are robots, organisms, or something else entirely remains a subject of debate among scientists.

The first xenobots were built by Douglas Blackiston according to blueprints generated by Kriegman’s AI program. They are small enough to travel inside human bodies, can walk and swim, and survive for weeks without food.

So far, xenobots have been less than a millimeter wide and composed of just two things – skin cells and heart muscle cells, both of which are derived from stem cells harvested from early, blastula-stage frog embryos. They are being used in medical and pharmaceutical research to evaluate novel dosage forms. Now, Kriegman and his team view their new AI as the next advance in their quest to explore the potential of artificial life.

While the AI program can start with any prompt, the scientists began with a simple request to design a physical machine capable of walking on land. That’s where the researchers’ input ended and the AI took over.

The computer started with a block about the size of a bar of soap. It could jiggle but definitely not walk. Knowing that it had not yet achieved its goal, the AI quickly iterated on the design. With each iteration, the AI assessed its design, identified flaws and whittled away at the simulated block to update its structure. Eventually, the simulated robot could bounce in place, then hop forward and then shuffle. Finally, after just nine tries, it generated a robot that could walk half its body length per second – about half the speed of an average human stride. The entire design process – from a shapeless block with zero movement to a full-on walking robot – took just 26 seconds on a laptop.
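The article does not describe the algorithm's internals, so the following is only a toy illustration of the assess-and-whittle loop described above, not the researchers' method. It assumes a tiny 2D voxel grid and a made-up `score` function that stands in for a physics simulation; the names `TARGET`, `score` and `whittle` are hypothetical.

```python
from itertools import product

SIZE = 4
ALL_CELLS = set(product(range(SIZE), repeat=2))

# Hypothetical "good" shape used only to make the toy score meaningful:
# a slab on top (y >= 2) with two "legs" at x = 0 and x = 3.
# A real system would instead simulate the body and measure how far it walks.
TARGET = {(x, y) for x, y in ALL_CELLS if y >= 2 or x in (0, 3)}


def score(design):
    """Toy fitness: number of cells where the design agrees with TARGET."""
    return sum((cell in design) == (cell in TARGET) for cell in ALL_CELLS)


def whittle(design, max_iters=20):
    """Each iteration, remove the single voxel whose removal helps the most."""
    design = set(design)
    history = [score(design)]
    for _ in range(max_iters):
        best_cell, best_score = None, history[-1]
        for cell in sorted(design):
            candidate_score = score(design - {cell})
            if candidate_score > best_score:
                best_cell, best_score = cell, candidate_score
        if best_cell is None:  # no single removal improves the score: stop
            break
        design.remove(best_cell)
        history.append(best_score)
    return design, history


block = set(ALL_CELLS)  # start from a solid block, as in the article
final_design, scores = whittle(block)
print(scores)
```

In this sketch the score rises by one with each voxel removed until no further removal helps, mirroring in miniature the way the simulated block was refined step by step.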

“Evolving robots previously required weeks of trial and error on a supercomputer, and of course, before any animals could run, swim or fly around our world, there were billions upon billions of years of trial and error,” Kriegman said. “This is because evolution has no foresight. It cannot see into the future to know if a specific mutation will be beneficial or catastrophic. We found a way to remove this blindfold, thereby compressing billions of years of evolution into an instant.”

“When people look at this robot, they might see a useless gadget,” Kriegman said. “I see the birth of a brand-new organism.”

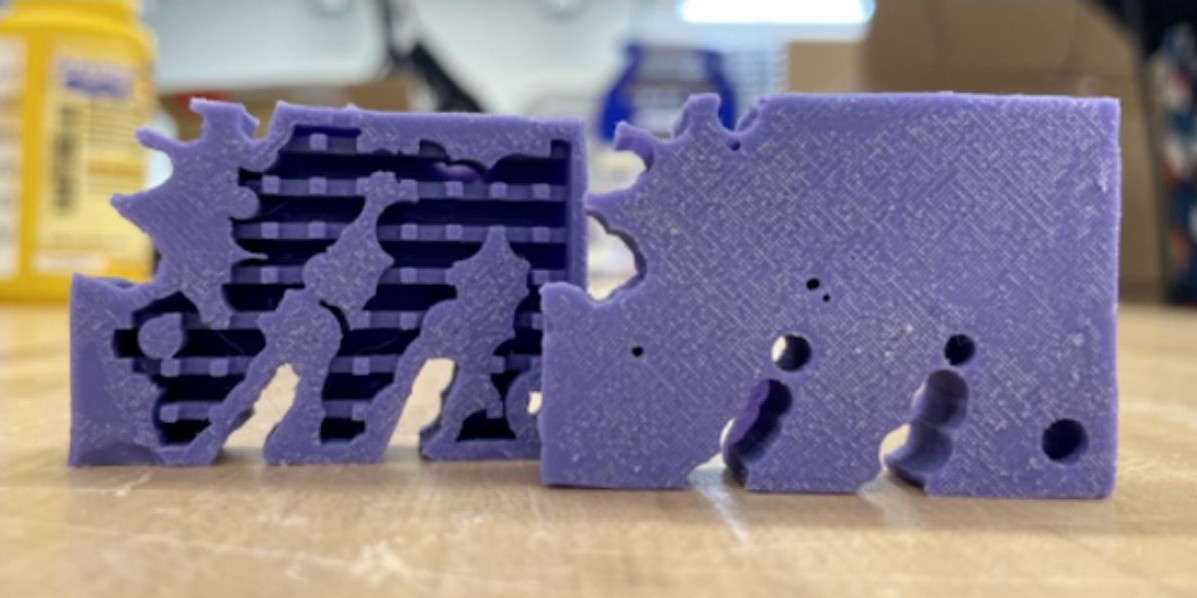

All on its own, AI surprisingly came up with the same solution for walking as nature – legs. But unlike nature’s decidedly symmetrical designs, AI took a different approach. The resulting robot has three legs, fins along its back and a flat face, and it’s riddled with holes.

“It’s interesting because we didn’t tell the AI that a robot should have legs,” Kriegman said. “It rediscovered that legs are a good way to move around on land. Legged locomotion is, in fact, the most efficient form of terrestrial movement.”

To see if the simulated robot could work in real life, Kriegman and his team used the AI-designed robot as a blueprint. First, they 3D printed a mold of the negative space around the robot’s body. Then, they filled the mold with liquid silicone rubber and let it cure for a couple of hours. When the team popped the solidified silicone out of the mold, it was squishy and flexible. To see if the robot’s simulated behavior – walking – was retained in the physical world, the team filled the rubber robot body with air, making its three legs expand. When the air was let out of the robot’s body, the legs contracted. As the team continually pumped air into the robot, it repeatedly expanded and then contracted – causing slow but steady locomotion.

While the evolution of legs makes sense, the holes are a curious addition. AI punched holes throughout the robot’s body in seemingly random places. Kriegman hypothesizes that porosity removes weight and adds flexibility, enabling the robot to bend its legs for walking. “We don’t really know what these holes do, but we know that they are important,” he said. “Because when we take them away, the robot either can’t walk anymore or can’t walk as well.”

Kriegman noted that most human-designed robots look like humans, dogs, or hockey pucks. “When humans design robots, we tend to design them to look like familiar objects,” he said. “But AI can create new possibilities and new paths forward that humans have never even considered. It could help us think and dream differently. And this might help us solve some of the most difficult problems we face.”

Although the AI’s first robot can do little more than shuffle forward, Kriegman imagines a world of possibilities for tools designed by the same program. Someday, similar robots might be able to navigate the rubble of a collapsed building, following thermal and vibrational signatures to search for trapped people and animals, or they might traverse sewer systems to diagnose problems, unclog pipes, and repair damage. The AI also might be able to design nano-robots that enter the human body and steer through the bloodstream to unclog arteries, diagnose illnesses or kill cancer cells, he added. “The only thing standing in our way of these new tools and therapies is that we have no idea how to design them,” Kriegman said. “Lucky for us, AI has ideas of its own.”